Zero-shot animal behaviour classification with vision-language foundation models

May 20, 2025

Gaspard Dussert

Vincent Miele

Colin Van Reeth

Anne Delestrade

Stéphane Dray

Simon Chamaillé-Jammes

Abstract

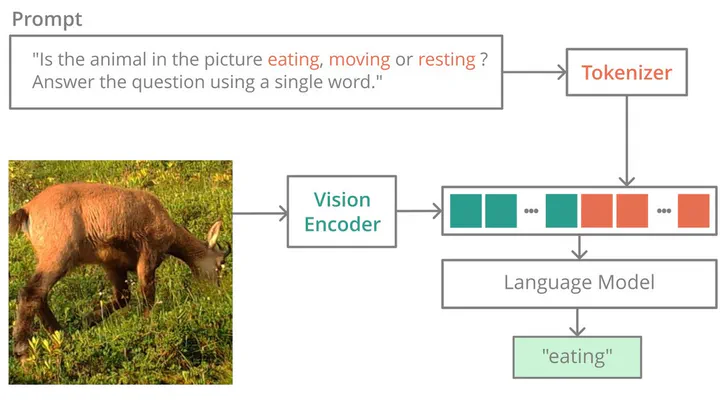

Understanding the behaviour of animals in their natural habitats is critical to ecology and conservation. Camera traps are a powerful tool for collecting such data with minimal disturbance. However, they produce very large numbers of images, which can make human annotation cumbersome or even impossible. While automated species identification with artificial intelligence has made impressive progress, the automatic classification of animal behaviours in camera trap images remains a developing field. Here, we explore the potential of foundation models, specifically vision-language models (VLMs), to perform this task without first training a model, which would require some level of human annotation. Using two datasets, of alpine and African fauna, we investigate the zero-shot capabilities of different kinds of recent VLMs to predict behaviours and estimate behaviour-specific diel activity patterns in three ungulate species. Comparing our predictions with behaviours annotated through participatory science, we show that these automatic methods can achieve an F1-score as high as 86.39% in behaviour classification and produce activity patterns that closely align with those derived from participatory science data (overlap indexes between 84% and 90%). These findings demonstrate the potential of foundation models, and of vision-language models in particular, for ecological research. Ecologists are encouraged to adopt these new methods and leverage their full capabilities to facilitate ecological studies.
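The overlap indexes quoted above compare two diel activity patterns for the same behaviour: one estimated from VLM-predicted labels and one from participatory-science labels. The abstract does not state which estimator was used, so the sketch below assumes the widely used coefficient of overlapping, Δ = ∫ min(f(t), g(t)) dt, where f and g are circular kernel densities of detection times over the 24-hour day. The von Mises kernel, its concentration `kappa=10.0`, the helper names `vonmises_kde` and `overlap_coefficient`, and the detection times are all illustrative assumptions, not the study's actual pipeline or data.

```python
import numpy as np


def vonmises_kde(times_h, grid_rad, kappa=10.0):
    """Circular kernel density of detection times (in hours, 0-24),
    evaluated at grid_rad (radians). kappa is an assumed smoothing value."""
    # Map clock time onto the circle: 24 h -> 2*pi radians.
    theta = np.asarray(times_h, dtype=float) / 24.0 * 2.0 * np.pi
    # von Mises kernel exp(kappa*cos(x - mu)) / (2*pi*I0(kappa)), one column per detection.
    diffs = grid_rad[:, None] - theta[None, :]
    kernels = np.exp(kappa * np.cos(diffs)) / (2.0 * np.pi * np.i0(kappa))
    # Average the kernels so the density integrates to 1 over the circle.
    return kernels.mean(axis=1)


def overlap_coefficient(times_a, times_b, kappa=10.0, n_grid=512):
    """Hypothetical helper: integral of min(f, g) over the 24-h cycle."""
    grid = np.linspace(0.0, 2.0 * np.pi, n_grid, endpoint=False)
    f = vonmises_kde(times_a, grid, kappa)
    g = vonmises_kde(times_b, grid, kappa)
    # Rectangle rule on a periodic grid (accurate for smooth circular densities).
    step = 2.0 * np.pi / n_grid
    return float(np.sum(np.minimum(f, g)) * step)


# Hypothetical detection times (hours) for one behaviour, labelled two ways.
vlm_times = [6.5, 7.0, 7.5, 8.0, 18.0, 18.5, 19.0]
human_times = [6.0, 7.0, 7.5, 8.5, 17.5, 18.5, 19.5]
print(round(overlap_coefficient(vlm_times, human_times), 3))
```

Because both densities integrate to 1, Δ is the area the two activity curves share: an index of 0.84 means 84% of that area is common to both, and values near 1 indicate nearly identical daily rhythms. Working on the circle matters here, since detections at 23:30 and 00:30 are one hour apart, not 23.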

Publication

Methods in Ecology and Evolution